Products

Managed Containers

Fully managed container hosting with OS-level monitoring, autoscaling, and load balancing.

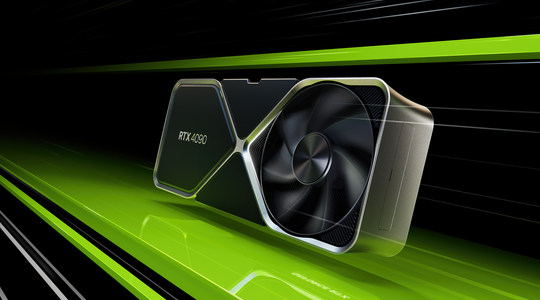

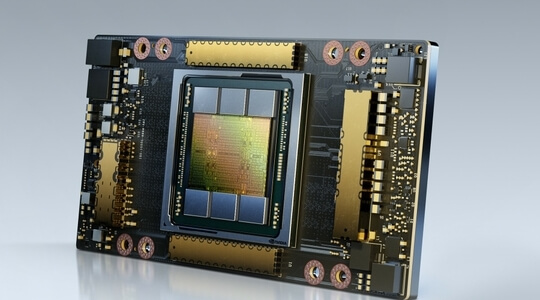

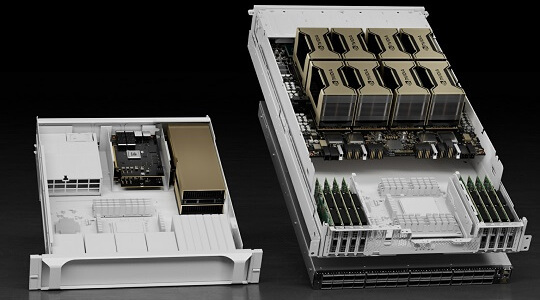

GPU Cloud

The industry's most cost-effective virtual machine infrastructure for deep learning, AI and rendering.

CPU Cloud

The latest Intel Xeon and AMD EPYC processors for scientific computing and HPC workloads.

Compare

Compare our GPU cloud offerings

Company

- Contact

- Hosts